This page provides a brief outline of the null space algorithm for nonlinear constrained optimization developped in full details in [11] Feppon, F., Allaire, G. and Dapogny, C. Null space gradient flows for constrained optimization with applications to shape optimization (2020). ESAIM: COCV, 26 90 (Open Access). HAL preprint hal-01972915.

(abstract)

(bibtex)

https://gitlab.com/florian.feppon/null-space-optimizer.

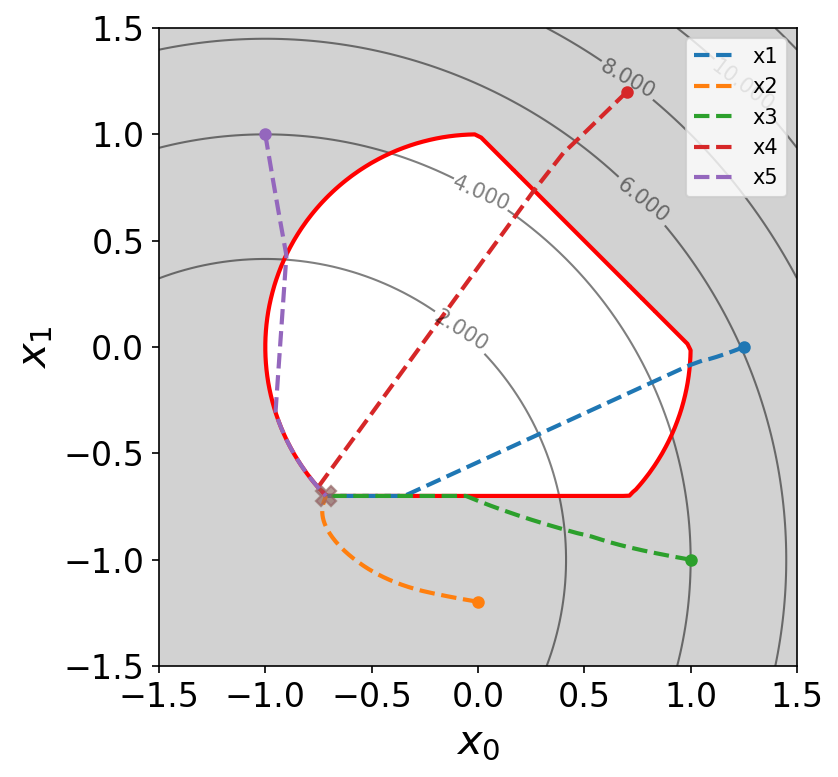

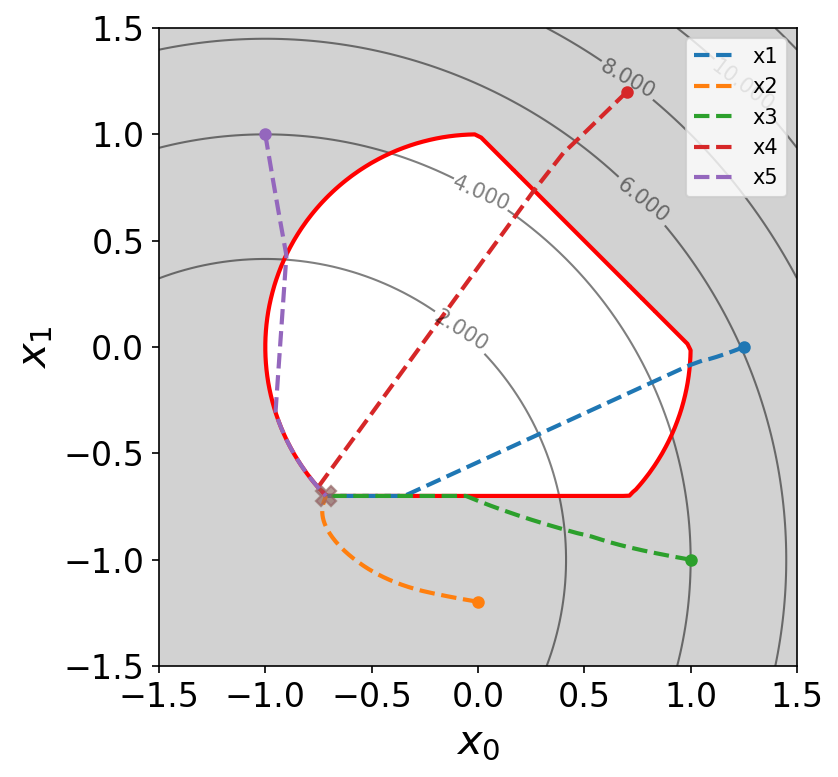

Some optimization trajectories obtained with the open-source implementation of our algorithm on a demonstrative test case.

This algorithm allows, in principle, to solve arbitrary nonlinear constrained optimization problems of the form \[ \newcommand{\x}{{\bf x}} \newcommand{\g}{{\bf g}} \newcommand{\<}{\leqslant} \newcommand{\R}{\mathbb{R}} \newcommand{\h}{{\bf h}} \begin{aligned} \min_{\x\in V}& \quad J(\x)\\ \textrm{s.t.} & \left\{\begin{aligned} \g(\x)&=0\\ \h(\x)&\<0, \end{aligned}\right. \end{aligned} \label{eqn:equation} \tag{1} \] where \(V\) is the optimization set, \(J\,:\,V\to \R\) is the objective function and \(\g\,:\,V\rightarrow \R^p\) and \(\h\,:\,V\rightarrow \R^q\) are respectively the respectively the equality and inequality constraint functionals.

The basis of the method is to solve an Ordinary Differential Equation (so-called ‘’null-space gradient flow’’), \[\begin{equation} \label{eqn:ODE} \dot{x}(t) =-\alpha_J \xi_J(x(t))-\alpha_C\xi_C(x(t)), \end{equation}\] which is able to solve the optimization problem \(\eqref{eqn:equation}\).

The direction \(\xi_J(x(t))\) is called the null space direction, it is the ‘’best’’ locally feasible descent direction for minimizing \(J\) and respecting the constraints. The direction \(\xi_C(x(t))\) is called the range space direction, it makes the violated constraints better satisfied and corrects unfeasible initializations.

The null space direction \(\xi_J(x)\) can be interpreted as a generalization of the gradient in presence of equality and inequality constraints. It is defined as positively proportional to the solution of the following minimization problem: \[\begin{aligned} & \min_{\xi\in V} \quad\mathrm{D}J(x)\xi\\ & \textrm{s.t. } \left\{\begin{aligned} \mathrm{D}\g(x)\xi &= 0\\ \mathrm{D}\h_{\widetilde{I\,}(x)}(x)\xi& \<0\\ ||\xi||_V & \< 1. \end{aligned}\right. \end{aligned} \] where \(\widetilde{I\,}(x)\) is the set of violated or saturated constraints: \[ \widetilde{I\,}(x):=\{i\in \{1,\dots,q\}\,|\, h_i(x)\geqslant 0\}.\] This minimization problem always has a solution. When the number of constraints is not too large, \(\xi_J(x)\) can be computed efficiently by mean of a dual subproblem.

The range space direction \(\xi_C(x)\) is a Gauss-Newton direction for cancelling the violated constraints of \(\widetilde{I\,}(x)\): \[ \newcommand{\CC}{\mathrm{C}} \xi_C(x):=\mathrm{D}\CC_{\widetilde{I\,}(x)}^T(\mathrm{D}\CC_{\widetilde{I\,}(x)} \mathrm{D}\CC_{\widetilde{I\,}(x)}^T)^{-1}\CC_{\widetilde{I\,}(x)}(x), \textrm{ with } \CC_{\widetilde{I\,}}(x):=\begin{bmatrix} \g(x) & \h_{\widetilde{I\,}(x)}(x)\end{bmatrix}^T,\, \h_{\widetilde{I\,}(x)}(x):=(h_i(x))_{i \in \widetilde{I\,}(x)}. \] It is orthogonal to \(\xi_J(x)\) and satisfies \(\mathrm{D}\CC_{\widetilde{I\,}(x)}\xi_C(x)=\CC_{\widetilde{I\,}(x)}(x)\) which entails an exponential decrease of the violation of the constraints at rate at least \(e^{-\alpha_C t}\) for the solution \(x(t)\) of : \[\forall t\geqslant 0,\, \g(x(t))= \g(x(0)) e^{-\alpha_C t}\textrm{ and } \h(x(t)) \< \max(0,\h(x(0))e^{-\alpha_C t}.\]

The algorithm proves very convenient to solve shape optimization test cases, because it does not require to tune meta-parameters (such as penalization parameters) difficult to guess for the user. This algorithm has been used for all our 2-D and 3-D topology optimization test cases.

Let us reproduce below the multiple load bridge test case of our paper [11]. We minimize the volume subject to up to 9 rigidity constraints corresponding to up to 9 different load test cases: \[ \newcommand{\u}{{\bf{u}}} \newcommand{\n}{\bf n} \newcommand{\D}{\mathrm{d}} \renewcommand{\div}{\mathrm{div}} \begin{aligned} \min_{\Gamma} & \quad \int_{\Omega_s} \D x \\ s.t. & \left\{ \begin{aligned} \int_{\Omega_s} Ae(\u_i):e(\u_i)\D x & \< C\textrm{ for }i=1\dots 9 \\ -\div(Ae(\u_i)) & = 0 \textrm{ in }\Omega_s\\ Ae(\u_i)\cdot\n& = \bf g_i \textrm{ on }\Gamma_i\textrm{ for }i=1\dots 9\\ Ae(\u_i)\cdot\n &= 0\textrm{ on } \Gamma\textrm{ for }i=1\dots 9, \end{aligned}\right. \end{aligned}.\] As the number of loads considered increase, the optimization path generates additional supporting bars for rigidity

One load only.

Three loads.

All nine loads.

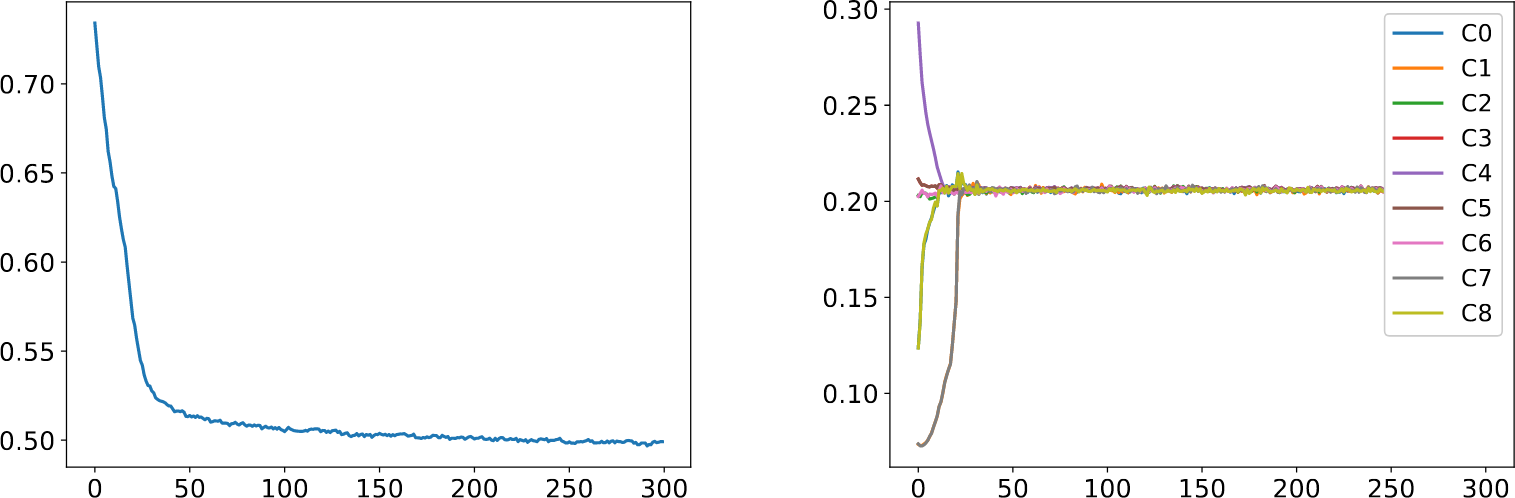

Convergence histories for the nine load test case: the objective function decreases even once the constraints are satisfied.